Sometimes you need to work on a feature which depends on another (unmerged) feature.

In this case, you may create a PR based on that feature instead of the main branch.

Currently, such PRs are closed and cannot be reopened once the parent feature is merged and its branch is deleted.

Automatically changing the target branch makes life a lot easier in such cases.

GitHub and Bitbucket already behave this way.

Example:

$PR_1$: main <- feature1

$PR_2$: feature1 <- feature2

Currently, merging $PR_1$ and deleting its branch leads to $PR_2$ being closed without the possibility to reopen.

This is both annoying and loses the review history when you open a new PR.

With this change, $PR_2$ will change its target branch to main ($PR_2$: main <- feature2) after $PR_1$ has been merged and its branch has been deleted.

This behavior is enabled by default but can be disabled.

For security reasons, this target branch change will not be executed when merging PRs targeting another repo.
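The retargeting rule described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the code from this PR: the `PullRequest` type, its fields, and `retargetChildren` are hypothetical names, and the `enabled` flag stands in for the setting that disables the behavior.

```go
package main

import "fmt"

// PullRequest is a simplified, hypothetical model of a pull request.
type PullRequest struct {
	ID         int
	BaseRepo   string // repository that contains the target branch
	HeadRepo   string // repository that contains the source branch
	BaseBranch string // target branch
	HeadBranch string // source branch
}

// retargetChildren returns a copy of open in which every PR that targeted the
// merged PR's head branch now targets the merged PR's base branch.
// Nothing is changed when the feature is disabled or when the merged PR
// targeted another repository.
func retargetChildren(merged PullRequest, open []PullRequest, enabled bool) []PullRequest {
	out := make([]PullRequest, len(open))
	copy(out, open)
	if !enabled || merged.BaseRepo != merged.HeadRepo {
		return out
	}
	for i := range out {
		if out[i].BaseRepo == merged.HeadRepo && out[i].BaseBranch == merged.HeadBranch {
			out[i].BaseBranch = merged.BaseBranch
		}
	}
	return out
}

func main() {
	pr1 := PullRequest{ID: 1, BaseRepo: "org/repo", HeadRepo: "org/repo", BaseBranch: "main", HeadBranch: "feature1"}
	pr2 := PullRequest{ID: 2, BaseRepo: "org/repo", HeadRepo: "org/repo", BaseBranch: "feature1", HeadBranch: "feature2"}

	after := retargetChildren(pr1, []PullRequest{pr2}, true)
	// prints "PR 2: main <- feature2"
	fmt.Printf("PR %d: %s <- %s\n", after[0].ID, after[0].BaseBranch, after[0].HeadBranch)
}
```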

Fixes #27062

Fixes #18408

---------

Co-authored-by: Denys Konovalov <kontakt@denyskon.de>

Co-authored-by: delvh <dev.lh@web.de>

Fixes #22236

---

Error currently occurring while trying to revert a commit using the `read-tree -m` approach:

> 2022/12/26 16:04:43 ...rvices/pull/patch.go:240:AttemptThreeWayMerge() [E] [63a9c61a] Unable to run read-tree -m! Error: exit status 128 - fatal: this operation must be run in a work tree
> - fatal: this operation must be run in a work tree

We need to clone a non-bare repository for `git read-tree -m` to work. bb371aee6e adds support to create a non-bare cloned temporary upload repository.

After cloning a non-bare temporary upload repository, we [set default index](https://github.com/go-gitea/gitea/blob/main/services/repository/files/cherry_pick.go#L37) (`git read-tree HEAD`). This operation ends up resetting the git index file (see investigation details below), so we need to call `git update-index --refresh` afterward.

Here's the diff of the index file before and after we execute SetDefaultIndex: https://www.diffchecker.com/hyOP3eJy/

Notice that **ctime** and **mtime** are set to 0 after SetDefaultIndex.

You can reproduce the same behavior using these steps:

```bash
$ git clone https://try.gitea.io/me-heer/test.git -s -b main
$ cd test
$ git read-tree HEAD
$ git read-tree -m 1f085d7ed8 1f085d7ed8 9933caed00
error: Entry '1' not uptodate. Cannot merge.
```

After that, the error can be fixed like this:

```
$ git update-index --refresh
$ git read-tree -m 1f085d7ed8 1f085d7ed8 9933caed00
```

- Make use of the `form-fetch-action` for the merge button, which automatically prevents the action from happening multiple times and shows a loading indicator as user feedback while the merge request is being processed by the server.
- Adjust the merge PR code to return a JSON response, as this is required for the `form-fetch-action` functionality.
- Resolves https://codeberg.org/forgejo/forgejo/issues/774
- Likely resolves the cause of https://codeberg.org/forgejo/forgejo/issues/1688#issuecomment-1313044

(cherry picked from commit 4ec64c19507caefff7ddaad722b1b5792b97cc5a)

Co-authored-by: Gusted <postmaster@gusted.xyz>

Fix #28157

This PR fixes possible bugs in the handling of Actions schedules.

## The Changes

- Move `UpdateRepositoryUnit` and `SetRepoDefaultBranch` from models to the service layer.
- Remove schedule plans from the database and cancel waiting and running schedule tasks in a repository when its Actions unit is disabled, either for that repository or globally.
- Remove schedule plans from the database and cancel waiting and running schedule tasks in a repository when its default branch changes.

Mainly for MySQL/MSSQL.

It is important for Gitea to use case-sensitive database charset

collation. If the database is using a case-insensitive collation, Gitea

will show startup error/warning messages, and show the errors/warnings

on the admin panel's Self-Check page.

Make `gitea doctor convert` work for MySQL to convert the collations of

database & tables & columns.

* Fix #28131

## ⚠️ BREAKING ⚠️

It is not quite breaking, but it's highly recommended to convert the database, tables, and columns to a consistent and case-sensitive collation.

Gitea prefers to use relative URLs in code (so that multiple domains work for some users), so it needs to use `toAbsoluteUrl` to generate a full URL when "Reference in New Issue" is clicked.

This PR also adds some comments in the test code.

#26851 assumed that `Commit` always exists when `PageIsDiff==true`. But for a 404 page, `Commit` doesn't exist, so the following code would panic, because a nil value can't be passed as a string parameter to `IsMultilineCommitMessage(string)` (or to the StringUtils.Cut in later PRs).

Fix https://github.com/go-gitea/gitea/pull/28547#issuecomment-1867740842

Since https://gitea.com/xorm/xorm/pulls/2383 was merged, xorm supports UPDATE JOIN.

To stay consistent across databases, xorm uses `engine.Join().Update`, but the actual generated SQL differs between databases.

For MySQL, it's `UPDATE table1 JOIN table2 ON join_conditions SET xxx WHERE xxx`.

For MSSQL, it's `UPDATE table1 SET xxx FROM TABLE1, TABLE2 WHERE join_conditions`.

For SQLite, per https://www.sqlite.org/lang_update.html, `UPDATE table1 SET xxx FROM table2 WHERE join_conditions` is supported since 3.33.0 (2020-08-14).

PostgreSQL uses the same syntax as SQLite.
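To make the dialect differences concrete, here is a small sketch that assembles the statement shapes listed above. It is an illustration only and not xorm's implementation: `updateJoinSQL` and the dialect names are hypothetical, and the optional extra `WHERE` filter of the MySQL form is left out for brevity.

```go
package main

import "fmt"

// updateJoinSQL returns an UPDATE ... JOIN statement in the shape each
// database expects. All names here are illustrative assumptions.
func updateJoinSQL(dialect, table1, table2, set, joinCond string) (string, error) {
	switch dialect {
	case "mysql":
		// MySQL joins first and sets afterwards.
		return fmt.Sprintf("UPDATE %s JOIN %s ON %s SET %s", table1, table2, joinCond, set), nil
	case "mssql":
		// MSSQL repeats the updated table in the FROM clause.
		return fmt.Sprintf("UPDATE %s SET %s FROM %s, %s WHERE %s", table1, set, table1, table2, joinCond), nil
	case "sqlite3", "postgres":
		// SQLite (3.33.0 and later) and PostgreSQL share the UPDATE ... FROM form.
		return fmt.Sprintf("UPDATE %s SET %s FROM %s WHERE %s", table1, set, table2, joinCond), nil
	default:
		return "", fmt.Errorf("unsupported dialect %q", dialect)
	}
}

func main() {
	for _, d := range []string{"mysql", "mssql", "sqlite3", "postgres"} {
		sql, err := updateJoinSQL(d, "issue", "repository", "issue.num_comments = 0", "issue.repo_id = repository.id")
		if err != nil {
			fmt.Println(err)
			continue
		}
		fmt.Printf("%-8s %s\n", d, sql)
	}
}
```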

#28361 introduced `syncBranchToDB` in `CreateNewBranchFromCommit`. This

PR will revert the change because it's unnecessary. Every push will

already be checked by `syncBranchToDB`.

This PR also created a test to ensure it's right.

This is a regression from #28220.

`builder.Cond` will not add `` ` `` automatically but xorm method

`Get/Find` adds `` ` ``.

This PR also adds tests to prevent the method from being implemented

incorrectly. The tests are added in `integrations` to test every

database.

Introduce the new generic deletion methods

- `func DeleteByID[T any](ctx context.Context, id int64) (int64, error)`

- `func DeleteByIDs[T any](ctx context.Context, ids ...int64) error`

- `func Delete[T any](ctx context.Context, opts FindOptions) (int64, error)`

So, we no longer need any specific deletion method and can just use

the generic ones instead.

Replacement of #28450

Closes #28450

---------

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

The CORS code has been unmaintained for a long time, and its behavior is not correct.

This PR tries to improve it. The key points are written as comments in the code, and more tests are added.

Fix #28515

Fix #27642

Fix #17098

- Remove `ObjectFormatID`

- Remove function `ObjectFormatFromID`.

- Use `Sha1ObjectFormat` directly but not a pointer because it's an

empty struct.

- Store `ObjectFormatName` in `repository` struct

Refactor Hash interfaces and centralize the hash function. This will allow easier introduction of different hash functions later on.

This forms the "no-op" part of the SHA256 enablement patch.

Fix #28056

This PR checks whether the repository has zero branches in the database when a branch is pushed. If so, the repository hasn't been synced yet.

This happens when a user upgrades from v1.20 to v1.21 and then pushes branches without visiting the repository's web interface: all repository routes check whether a branch sync is necessary, but push had no such check.

Every repository is in one of two states, synced or not synced. A repository with zero branches in the database is assumed to be unsynced; otherwise it is treated as synced. For a synced repository, a push only needs to update the branch or insert a new one; for an unsynced repository, a full sync is performed. As a result, a push adds almost no extra load, which performs better than the alternative proposal.

The implementation first tries to update the branch. If the update affects more than 0 records, the branch is already in the database and nothing more is needed. If no record is affected, the branch does not exist in the database, which leaves two possibilities: either this is a new branch and only its record needs to be inserted, or the branches haven't been synced yet and all of them need to be synced into the database.
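The decision flow above can be sketched with an in-memory stand-in for the branch table. This is a simplified illustration, not the code of this PR: `branchStore`, `update`, and `onPush` are hypothetical names, and a real implementation would run the update and insert as database statements.

```go
package main

import "fmt"

// branchStore is a hypothetical in-memory stand-in for the branch table of
// one repository: branch name -> commit ID.
type branchStore map[string]string

// update mimics an UPDATE statement and returns the number of affected rows.
func (s branchStore) update(name, commit string) int {
	if _, ok := s[name]; !ok {
		return 0
	}
	s[name] = commit
	return 1
}

// onPush applies the "update first, then insert or full sync" strategy and
// reports which path was taken. gitBranches is what git itself knows.
func onPush(db branchStore, gitBranches map[string]string, name, commit string) string {
	if db.update(name, commit) > 0 {
		return "updated" // the branch was already in the database
	}
	if len(db) == 0 {
		// Zero branches in the database: the repository was never synced.
		for n, c := range gitBranches {
			db[n] = c
		}
		return "full sync"
	}
	db[name] = commit // the repository is synced, so this is a new branch
	return "inserted"
}

func main() {
	git := map[string]string{"main": "a1", "dev": "b2"}
	db := branchStore{}

	fmt.Println(onPush(db, git, "dev", "b2"))   // full sync
	fmt.Println(onPush(db, git, "dev", "c3"))   // updated
	fmt.Println(onPush(db, git, "topic", "d4")) // inserted
}
```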

The function `GetByBean` has an obvious defect: fields with empty values are ignored when the query is built. Callers can then get a wrong result, which could lead to a security problem.

To avoid this, this PR removes the function `GetByBean` and all references to it, and introduces new generic functions to replace it.

The recommended usage is shown below.

```go
// query an object by its ID
obj, err := db.GetByID[Object](ctx, id)
// query with other conditions
obj, err := db.Get[Object](ctx, builder.Eq{"a": a, "b": b})
```
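To illustrate why bean-style lookups are risky, the following self-contained sketch contrasts a builder that skips zero-value fields with one that keeps every condition the caller supplied. It does not use xorm or Gitea's `db` package; `User`, `condsFromBean`, and `condsExplicit` are hypothetical names chosen for this example.

```go
package main

import (
	"fmt"
	"sort"
)

// User is a hypothetical bean used only for this illustration.
type User struct {
	Name  string
	Email string
}

// condsFromBean mimics a bean-style query builder: zero-value fields are
// silently skipped instead of being turned into conditions.
func condsFromBean(u User) []string {
	var conds []string
	if u.Name != "" {
		conds = append(conds, fmt.Sprintf("name = %q", u.Name))
	}
	if u.Email != "" {
		conds = append(conds, fmt.Sprintf("email = %q", u.Email))
	}
	return conds
}

// condsExplicit keeps every condition the caller asked for, including
// comparisons against empty values.
func condsExplicit(eq map[string]string) []string {
	conds := make([]string, 0, len(eq))
	for k, v := range eq {
		conds = append(conds, fmt.Sprintf("%s = %q", k, v))
	}
	sort.Strings(conds) // map iteration order is random, so sort for stable output
	return conds
}

func main() {
	// Suppose the e-mail comes from user input and happens to be empty.
	email := ""

	// The bean version produces no condition at all, so the lookup would
	// match an arbitrary row.
	fmt.Println(len(condsFromBean(User{Email: email})))

	// The explicit version still filters on the empty value.
	fmt.Println(condsExplicit(map[string]string{"email": email}))
}
```

With the bean-style builder an empty input widens the query instead of narrowing it, which is the kind of wrong result the paragraph above warns about.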

System users (Ghost, ActionsUser, etc) have a negative id and may be the

author of a comment, either because it was created by a now deleted user

or via an action using a transient token.

The GetPossibleUserByID function has special cases related to system

users and will not fail if given a negative id.

Refs: https://codeberg.org/forgejo/forgejo/issues/1425

(cherry picked from commit 6a2d2fa243)

Fixes #27819

We have support for two factor logins with the normal web login and with

basic auth. For basic auth the two factor check was implemented at three

different places and you need to know that this check is necessary. This

PR moves the check into the basic auth itself.

Fixes #27598

In #27080, the logic for the token endpoints was updated to allow admins to create and view tokens in other accounts. However, the same functionality was not added to the DELETE endpoint. This PR makes the DELETE endpoint behave the same as the other token endpoints and adds unit tests.

Closes #27455

> The mechanism responsible for long-term authentication (the 'remember me' cookie) uses a weak construction technique. It will hash the user's hashed password and the rands value; it will then call the secure cookie code, which will encrypt the user's name with the computed hash. If one were able to dump the database, they could extract those two values to rebuild that cookie and impersonate a user. That vulnerability exists from the date the dump was obtained until a user changed their password.
>
> To fix this security issue, the cookie could be created and verified using a different technique such as the one explained at https://paragonie.com/blog/2015/04/secure-authentication-php-with-long-term-persistence#secure-remember-me-cookies.

The PR removes the now obsolete setting `COOKIE_USERNAME`.

`assert.Fail()` lets the test continue executing, while `assert.FailNow()` stops it. I think these uses of `assert.Fail()` should exit immediately.

PS: perhaps it's a good idea to use [require](https://pkg.go.dev/github.com/stretchr/testify/require) in some places, because the assert package by default does not stop the test when an assertion fails, which makes it difficult to find the root cause.

- Currently in the cron tasks, the 'Previous Time' only displays the

previous time of when the cron library executes the function, but not

any of the manual executions of the task.

- Store the last run's time in memory in the Task struct and use it when it is later than the time at which the cron library last executed this task.
- This ensures that if an instance admin manually starts a task, there's feedback that this task is running or has been run, because the task might finish so quickly that the status icon has already changed to a checkmark.

- Tasks that are executed at startup now reflect this as well, as the

time of the execution of that task on startup is now being shown as

'Previous Time'.

- Added integration tests for the API part, which is easier to test

because querying the HTML table of cron tasks is non-trivial.

- Resolves https://codeberg.org/forgejo/forgejo/issues/949

(cherry picked from commit fd34fdac14)

---------

Co-authored-by: Gusted <postmaster@gusted.xyz>

Co-authored-by: KN4CK3R <admin@oldschoolhack.me>

Co-authored-by: silverwind <me@silverwind.io>

- MySQL 5.7 support and testing is dropped

- MySQL tests now execute against 8.1, up from 5.7 and 8.0

- PostgreSQL 10 and 11 support is dropped

- PostgreSQL tests now execute against 16, up from 15

- MSSQL 2008 support is dropped

- MSSQL tests now run against locked 2022 version

Fixes: https://github.com/go-gitea/gitea/issues/25657

Ref: https://endoflife.date/mysql

Ref: https://endoflife.date/postgresql

Ref: https://endoflife.date/mssqlserver

## ⚠️ BREAKING ⚠️

Support for MySQL 5.7, PostgreSQL 10 and 11, and MSSQL 2008 is dropped.

You are encouraged to upgrade to supported versions.

---------

Co-authored-by: techknowlogick <techknowlogick@gitea.com>

Part of #27065

This PR touches functions used in templates. As templates are not statically typed, errors are harder to find, but I hope I caught them all. Some testing by other people would not hurt.

Blank issues should be enabled unless they are explicitly disabled through the `blank_issues_enabled` field of the issue config. The implementation currently has a bug: if you create an issue config file with only `contact_links` and without a `blank_issues_enabled` field, `blank_issues_enabled` is set to false by default.

The fix is only one line, but I decided to also improve the tests to make sure there are no other problems with the implementation.
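One common way to get this kind of default right is to set the field before decoding, so that an absent key leaves it untouched. The sketch below shows the idea with `encoding/json` to stay within the standard library; the real issue config is a YAML file, and `IssueConfig`, `ContactLink`, and `parseIssueConfig` are hypothetical names rather than the types touched by this PR.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// ContactLink and IssueConfig are simplified, hypothetical versions of the
// issue config structure.
type ContactLink struct {
	Name string `json:"name"`
	URL  string `json:"url"`
}

type IssueConfig struct {
	BlankIssuesEnabled bool          `json:"blank_issues_enabled"`
	ContactLinks       []ContactLink `json:"contact_links"`
}

// parseIssueConfig sets the default before decoding. Keys that are missing
// from the input leave the corresponding struct fields unchanged.
func parseIssueConfig(data []byte) (IssueConfig, error) {
	cfg := IssueConfig{BlankIssuesEnabled: true}
	err := json.Unmarshal(data, &cfg)
	return cfg, err
}

func main() {
	onlyLinks := []byte(`{"contact_links": [{"name": "Chat", "url": "https://example.com"}]}`)
	cfg, err := parseIssueConfig(onlyLinks)
	fmt.Println(cfg.BlankIssuesEnabled, err) // true <nil>

	disabled := []byte(`{"blank_issues_enabled": false}`)
	cfg, err = parseIssueConfig(disabled)
	fmt.Println(cfg.BlankIssuesEnabled, err) // false <nil>
}
```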

This is a bugfix, so it should be backported to 1.20.

Part of #27065

This reduces the usage of `db.DefaultContext`. I think I've got enough files for the first PR. When this is merged, I will continue working on this.

Considering how many files this PR affects, I hope it won't take too long to merge, so I don't end up in merge conflict hell.

---------

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Currently, Artifact does not have an expiration and automatic cleanup

mechanism, and this feature needs to be added. It contains the following

key points:

- [x] add global artifact retention days option in config file. Default

value is 90 days.

- [x] add cron task to clean up expired artifacts. It should run once a

day.

- [x] support custom retention period from `retention-days: 5` in

`upload-artifact@v3`.

- [x] artifacts link in actions view should be non-clickable text when

expired.

They currently throw an Internal Server Error when you use them without a token. Now they correctly return a `token is required` error.

This is not a security issue. If you use these endpoints with a token that doesn't have the correct permission, you get the correct error. This is not affected by this PR.

1. The old `prepareQueryArg` did double-unescaping of form value.

2. By the way, remove the unnecessary `ctx.Flash = ...` in

`MockContext`.

Co-authored-by: Giteabot <teabot@gitea.io>

Just like `models/unittest`, the testing helper functions should be in a separate package: `contexttest`

And complete the TODO:

> // TODO: move this function to other packages, because it depends on "models" package

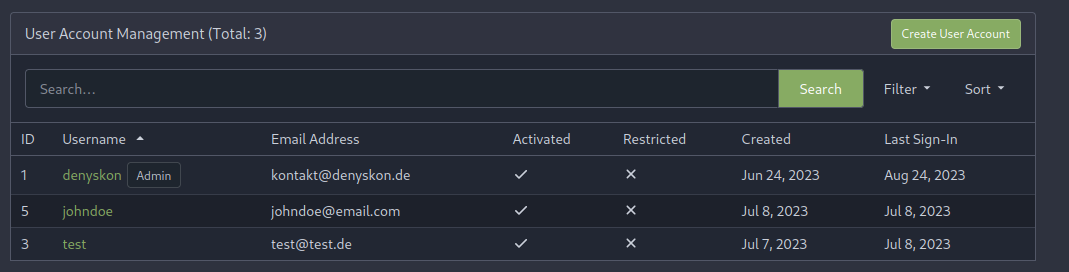

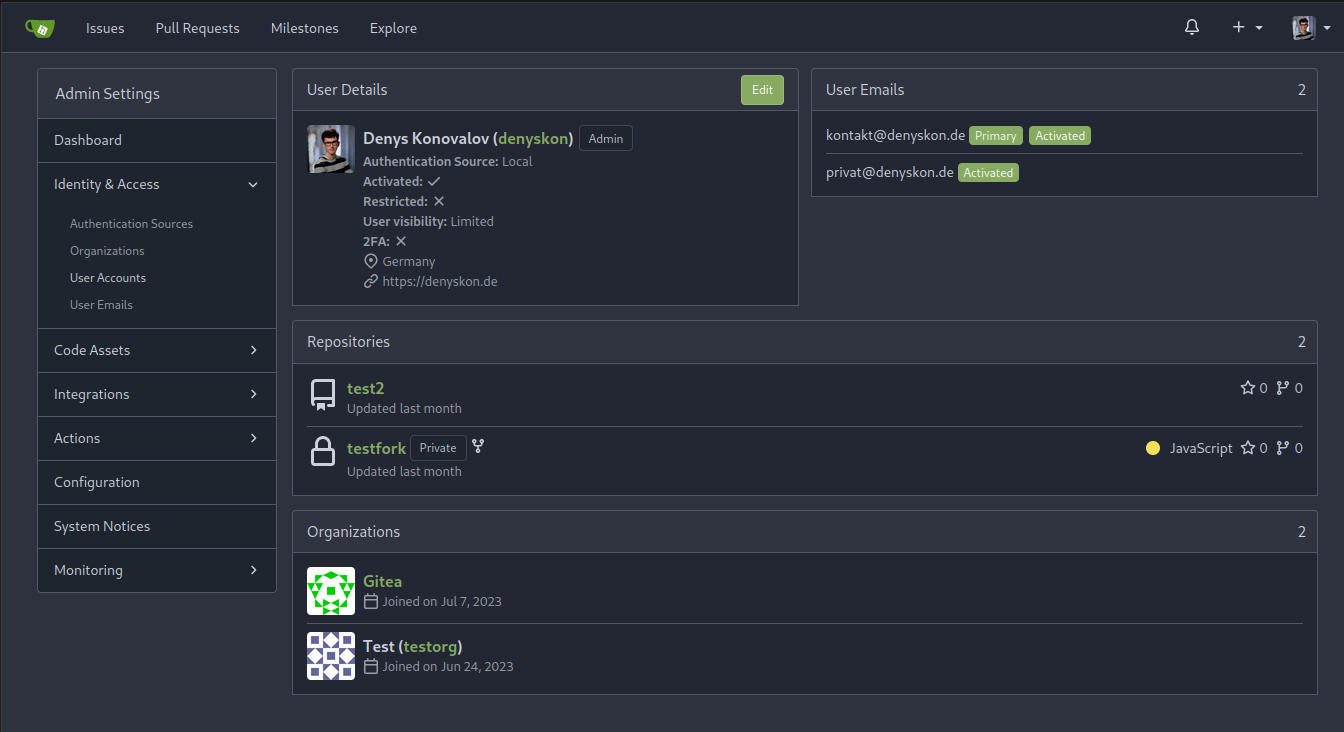

This PR implements a proposal to clean up the admin users table by

moving some information out to a separate user details page (which also

displays some additional information).

Other changes:

- move edit user page from `/admin/users/{id}` to

`/admin/users/{id}/edit` -> `/admin/users/{id}` now shows the user

details page

- show if user is instance administrator as a label instead of a

separate column

- separate explore users template into a page- and a shared one, to make

it possible to use it on the user details page

- fix issue where there was no margin between alert message and

following content on admin pages


Partially resolves #25939

---------

Co-authored-by: Giteabot <teabot@gitea.io>

- Resolves https://codeberg.org/forgejo/forgejo/issues/580

- Return an `upload_field` in any release API response, which points to the API URL for uploading new assets.

- Adds unit test.

- Adds integration testing to verify URL is returned correctly and that

upload endpoint actually works

---------

Co-authored-by: Gusted <postmaster@gusted.xyz>

Fixes: #26333.

Previously, this endpoint only updates the `StatusCheckContexts` field

when `EnableStatusCheck==true`, which makes it impossible to clear the

array otherwise.

This patch uses slice `nil`-ness to decide whether to update the list of

checks. The field is ignored when either the client explicitly passes in

a null, or just omits the field from the json ([which causes

`json.Unmarshal` to leave the struct field

unchanged](https://go.dev/play/p/Z2XHOILuB1Q)). I think this is a better

measure of intent than whether the `EnableStatusCheck` flag was set,

because it matches the semantics of other field types.
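The nil-versus-empty distinction relied on here can be reproduced with a few lines of standard-library Go. The sketch below is not the endpoint's code: `editOption` and `applyEdit` are hypothetical names, and only the `json.Unmarshal` behavior for omitted, `null`, and `[]` values is taken as given.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// editOption is a hypothetical, minimal request body.
type editOption struct {
	StatusCheckContexts []string `json:"status_check_contexts"`
}

// applyEdit returns the new list of status check contexts. A nil slice
// (field omitted or explicitly null) keeps the current value; any non-nil
// slice, including an empty one, replaces it.
func applyEdit(current []string, body []byte) ([]string, error) {
	var opt editOption
	if err := json.Unmarshal(body, &opt); err != nil {
		return current, err
	}
	if opt.StatusCheckContexts == nil {
		return current, nil
	}
	return opt.StatusCheckContexts, nil
}

func main() {
	current := []string{"ci/build"}

	for _, body := range []string{
		`{}`,                                        // field omitted
		`{"status_check_contexts": null}`,           // explicit null
		`{"status_check_contexts": []}`,             // clear the list
		`{"status_check_contexts": ["ci/test"]}`,    // replace the list
	} {
		got, err := applyEdit(current, []byte(body))
		fmt.Printf("%-42s -> %q (err=%v)\n", body, got, err)
	}
}
```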

Also adds a test case. I noticed that [`testAPIEditBranchProtection`

only checks the branch

name](c1c83dbaec/tests/integration/api_branch_test.go (L68))

and no other fields, so I added some extra `GET` calls and specific

checks to make sure the fields are changing properly.

I added those checks to the existing integration test; is that the right place for them?

Fixes #25564

Fixes #23191

- Api v2 search endpoint should return only the latest version matching

the query

- Api v3 search endpoint should return `take` packages not package

versions

- The permalink and 'Reference in New Issue' URL of a renderable file (one where you can see both the source and a rendered version, such as Markdown) doesn't contain `?display=source`. As a result, the URL has no effect, because the rendered version is shown by default instead of the source.
- Add `?display=source` to the permalink URL and to 'Reference in New Issue' if it's a renderable file.

- Add integration testing.

Refs: https://codeberg.org/forgejo/forgejo/pulls/1088

Co-authored-by: Gusted <postmaster@gusted.xyz>

Co-authored-by: Giteabot <teabot@gitea.io>

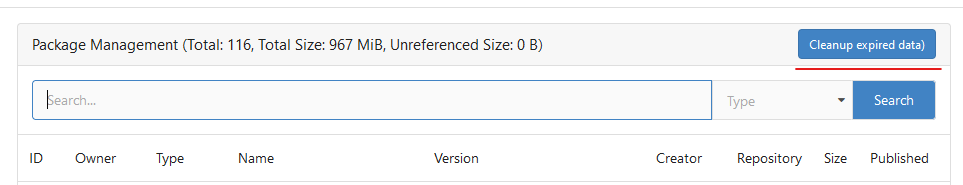

Until now expired package data gets deleted daily by a cronjob. The

admin page shows the size of all packages and the size of unreferenced

data. The users (#25035, #20631) expect the deletion of this data if

they run the cronjob from the admin page but the job only deletes data

older than 24h.

This PR adds a new button which deletes all expired data.

---------

Co-authored-by: silverwind <me@silverwind.io>

Follow #25229

Copy from

https://github.com/go-gitea/gitea/pull/26290#issuecomment-1663135186

The bug is that we cannot get changed files for the

`pull_request_target` event. This event runs in the context of the base

branch, so we won't get any changes if we call

`GetFilesChangedSinceCommit` with `PullRequest.Base.Ref`.

Not too important, but I think that it'd be a pretty neat touch.

Also fixes some layout bugs introduced by a previous PR.

---------

Co-authored-by: Gusted <postmaster@gusted.xyz>

Co-authored-by: Caesar Schinas <caesar@caesarschinas.com>

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Fix #24662.

Replace #24822 and #25708 (although it has been merged)

## Background

In the past, Gitea supported issue searching with a keyword and

conditions in a less efficient way. It worked by searching for issues

with the keyword and obtaining limited IDs (as it is heavy to get all)

on the indexer (bleve/elasticsearch/meilisearch), and then querying with

conditions on the database to find a subset of the found IDs. This is

why the results could be incomplete.

To solve this issue, we need to store all fields that could be used as

conditions in the indexer and support both keyword and additional

conditions when searching with the indexer.

## Major changes

- Redefine `IndexerData` to include all fields that could be used as

filter conditions.

- Refactor `Search(ctx context.Context, kw string, repoIDs []int64,

limit, start int, state string)` to `Search(ctx context.Context, options

*SearchOptions)`, so it supports more conditions now.

- Change the data type stored in `issueIndexerQueue`. Use

`IndexerMetadata` instead of `IndexerData` in case the data has been

updated while it is in the queue. This also reduces the storage size of

the queue.

- Enhance searching with Bleve/Elasticsearch/Meilisearch, make them

fully support `SearchOptions`. Also, update the data versions.

- Keep most logic of the database indexer, but remove `issues.SearchIssueIDsByKeyword` in `models` to avoid confusion about where the entry point for searching issues is.

- Start a Meilisearch instance to test it in unit tests.

- Add unit tests with almost full coverage to test

Bleve/Elasticsearch/Meilisearch indexer.

---------

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

The API should only return the real email address of a user if the caller is logged in. The check for this doesn't work; this PR fixes it. This is not really a security issue, but it can lead to spam.

---------

Co-authored-by: silverwind <me@silverwind.io>

Fix #25934

Add an `ignoreGlobal` parameter to `reqUnitAccess` and only check globally disabled units when `ignoreGlobal` is false. This way, org-level and user-level projects aren't affected by a globally disabled `repo.projects` unit.

The version listed in rpm repodata should only contain the rpm version

(1.0.0) and not the combination of version and release (1.0.0-2). We

correct this behaviour in primary.xml.gz, filelists.xml.gz and

others.xml.gz.
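As a rough illustration of separating the two parts, the sketch below splits a combined string at its last hyphen. This is an assumption-laden example rather than the code of this change: `splitVersionRelease` is a hypothetical helper, it ignores epochs, and it relies on neither the RPM version nor the release containing a hyphen.

```go
package main

import (
	"fmt"
	"strings"
)

// splitVersionRelease splits "version-release" at the last hyphen.
// If there is no hyphen, the whole input is treated as the version.
func splitVersionRelease(full string) (version, release string) {
	if i := strings.LastIndex(full, "-"); i >= 0 {
		return full[:i], full[i+1:]
	}
	return full, ""
}

func main() {
	v, r := splitVersionRelease("1.0.0-2")
	fmt.Printf("ver=%q rel=%q\n", v, r) // prints ver="1.0.0" rel="2"
}
```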

Signed-off-by: Peter Verraedt <peter@verraedt.be>

Replace #25892

Close #21942

Close #25464

Major changes:

1. Serve "robots.txt" and ".well-known/security.txt" in the "public"

custom path

* All files in "public/.well-known" can be served, just like

"public/assets"

3. Add a test for ".well-known/security.txt"

4. Simplify the "FileHandlerFunc" logic, now the paths are consistent so

the code can be simpler

5. Add CORS header for ".well-known" endpoints

6. Add logs to tell users they should move some of their legacy custom

public files

```

2023/07/19 13:00:37 cmd/web.go:178:serveInstalled() [E] Found legacy public asset "img" in CustomPath. Please move it to /work/gitea/custom/public/assets/img

2023/07/19 13:00:37 cmd/web.go:182:serveInstalled() [E] Found legacy public asset "robots.txt" in CustomPath. Please move it to /work/gitea/custom/public/robots.txt

```

This PR is not breaking.

---------

Co-authored-by: silverwind <me@silverwind.io>

Co-authored-by: Giteabot <teabot@gitea.io>

Replace #10912

There are also many new tests to cover the CLI behavior.

There were some concerns about the "option order in hook scripts" (https://github.com/go-gitea/gitea/pull/10912#issuecomment-1137543314). This is not a problem now, because the hook script uses the `/gitea hook --config=/app.ini pre-receive` format. The "config" option is global and can appear anywhere.

----

## ⚠️ BREAKING ⚠️

This PR does its best to avoid breaking anything. The major changes are:

* `gitea` itself won't accept web's options: `--install-port` / `--pid` / `--port` / `--quiet` / `--verbose` .... They are `web` sub-command's options.
    * Use `./gitea web --pid ....` instead
    * `./gitea` can still run the `web` sub-command as shorthand, with default options
* The sub-command's options must follow the sub-command
    * Before: `./gitea --sub-opt subcmd` might be equal to `./gitea subcmd --sub-opt` (well, might not ...)
    * After: only `./gitea subcmd --sub-opt` can be used
    * The global options like `--config` are not affected

Fix #25776. Close #25826.

In the discussion of #25776, @wolfogre's suggestion was to remove the commit statuses `running` and `warning` to stay consistent with GitHub.

References:

- https://docs.github.com/en/rest/commits/statuses?apiVersion=2022-11-28#about-commit-statuses

## ⚠️ BREAKING ⚠️

So the commit status of Gitea will be consistent with GitHub, only

`pending`, `success`, `error` and `failure`, while `warning` and

`running` are not supported anymore.

---------

Co-authored-by: Jason Song <i@wolfogre.com>

The current Actions artifacts implementation only supports single-file artifacts. To support uploading multiple files, it needs to:

- save each file as its own database record with the same run-id, the same artifact-name, and a proper artifact-path
- change the artifact upload URL so that it no longer contains an artifact-id, since multiple files create multiple artifact-ids
- support `path` in the download-artifact action; an artifact should be downloaded to `{path}/{artifact-path}`
- provide a zip download link in the artifacts list on the summary page of the repo action view, whether the artifact contains a single file or multiple files

Before: the concept "Content string" is used everywhere. It has some

problems:

1. Sometimes it means "base64 encoded content", sometimes it means "raw

binary content"

2. It doesn't work with large files, eg: uploading a 1G LFS file would

make Gitea process OOM

This PR does the refactoring: use "ContentReader" / "ContentBase64"

instead of "Content"

This PR is not breaking because the key in API JSON is still "content":

`` ContentBase64 string `json:"content"` ``

We refactored the token parsing part of `userIDFromToken` into a new function, `parseToken`. `parseToken` returns the string `token` from the request and a boolean `ok` indicating whether the token exists. This lets us distinguish between token non-existence and token inconsistency in the `verify` function, which solves the problem of there being no proper error message when the token is inconsistent.
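The value of returning a separate `ok` flag can be sketched as follows. This is a hypothetical illustration, not the refactored code: the header format, the accepted prefixes, `checkToken`, and `errInvalidToken` are all assumptions made for the example.

```go
package main

import (
	"errors"
	"fmt"
	"strings"
)

var errInvalidToken = errors.New("invalid token")

// parseToken extracts the token from an Authorization header value.
// ok is false when no token was supplied at all.
func parseToken(authHeader string) (token string, ok bool) {
	fields := strings.Fields(authHeader)
	if len(fields) != 2 {
		return "", false
	}
	switch strings.ToLower(fields[0]) {
	case "bearer", "token":
		return fields[1], true
	}
	return "", false
}

// checkToken distinguishes "no token" (false, nil) from "wrong token"
// (false, errInvalidToken), so the caller can report a proper error.
func checkToken(authHeader, expected string) (bool, error) {
	token, ok := parseToken(authHeader)
	if !ok {
		return false, nil
	}
	if token != expected {
		return false, errInvalidToken
	}
	return true, nil
}

func main() {
	fmt.Println(checkToken("", "secret"))              // false <nil>
	fmt.Println(checkToken("Bearer wrong", "secret"))  // false invalid token
	fmt.Println(checkToken("Bearer secret", "secret")) // true <nil>
}
```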

close #24439

related #22119

---------

Co-authored-by: Jason Song <i@wolfogre.com>

Co-authored-by: Giteabot <teabot@gitea.io>

Fixes (?) #25538

Fixes https://codeberg.org/forgejo/forgejo/issues/972

Regression #23879

#23879 introduced a change which prevents read access to packages if a user is not a member of an organization.

That PR also contained a change which disallows package access if the

team unit is configured with "no access" for packages. I don't think

this change makes sense (at the moment). It may be relevant for private

orgs. But for public or limited orgs that's useless because an

unauthorized user would have more access rights than the team member.

This PR restores the old behaviour "If a user has read access for an

owner, they can read packages".

---------

Co-authored-by: Giteabot <teabot@gitea.io>

related #16865

This PR adds an accessibility check before mounting container blobs.

---------

Co-authored-by: techknowlogick <techknowlogick@gitea.io>

Co-authored-by: silverwind <me@silverwind.io>

This PR displays a pull request creation hint on the repository home page when there are newly created branches with no pull request. Only branches updated within the last 6 hours are considered, and at most 2 of them are displayed.

Inspired by #14003

Replace #14003

Resolves #311

Resolves #13196

Resolves #23743

co-authored by @kolaente

Follow #25229

At present, when the trigger event is `pull_request_target`, the `ref`

and `sha` of `ActionRun` are set according to the base branch of the

pull request. This makes it impossible for us to find the head branch of

the `ActionRun` directly. In this PR, the `ref` and `sha` will always be

set to the head branch and they will be changed to the base branch when

generating the task context.

Fixes #24723

Direct serving of content aka HTTP redirect is not mentioned in any of

the package registry specs but lots of official registries do that so it

should be supported by the usual clients.

Fix #25558

Extract from #22743

This PR adds a repository check when creating or deleting branches via the API. Branches cannot be created or deleted in mirror repositories and archived repositories.

This adds an API for uploading and deleting avatars of users, repos, and organisations. I'm not sure whether this should also be added to the admin API.

Resolves #25344

---------

Co-authored-by: silverwind <me@silverwind.io>

Co-authored-by: Giteabot <teabot@gitea.io>

Related #14180

Related #25233

Related #22639

Close #19786

Related #12763

This PR changes all branch retrieval methods to read from the database instead of reading git data, in order to reduce git read operations.

- [x] Sync git branches information into database when push git data

- [x] Create a new table `Branch`, merge some columns of `DeletedBranch`

into `Branch` table and drop the table `DeletedBranch`.

- [x] Read `Branch` table when visit `code` -> `branch` page

- [x] Read `Branch` table when list branch names in `code` page dropdown

- [x] Read `Branch` table when list git ref compare page

- [x] Provide a button in admin page to manually sync all branches.

- [x] Sync branches if repository is not empty but database branches are

empty when visiting pages with branches list

- [x] Use `commit_time desc` as the default FindBranch order by to keep

consistent as before and deleted branches will be always at the end.

---------

Co-authored-by: Jason Song <i@wolfogre.com>

Fix #25088

This PR adds the support for

[`pull_request_target`](https://docs.github.com/en/actions/using-workflows/events-that-trigger-workflows#pull_request_target)

workflow trigger. `pull_request_target` is similar to `pull_request`,

but the workflow triggered by the `pull_request_target` event runs in

the context of the base branch of the pull request rather than the head

branch. Since the workflow from the base is considered trusted, it can

access the secrets and doesn't need approvals to run.

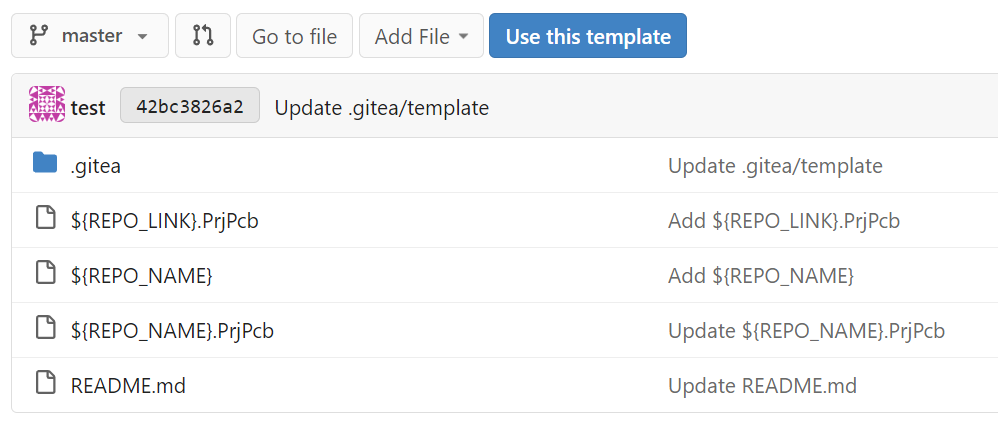

### Summary

Extend the template variable substitution to replace file paths. This

can be helpful for setting up log files & directories that should match

the repository name.

### PR Changes

- Move files matching glob pattern when setting up repos from template

- For security, added ~escaping~ sanitization for cross-platform support

and to prevent directory traversal (thanks @silverwind for the

reference)

- Added unit testing for escaping function

- Fixed the integration tests for repo template generation by passing

the repo_template_id

- Updated the integration test files to add some variable substitution and assert the outputs
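A path produced by variable substitution should never be able to leave the repository directory. The sketch below shows one generic way to neutralize traversal attempts with the standard `path` package; it is not the sanitization function added in this PR, and `sanitizeRelPath` is a hypothetical name.

```go
package main

import (
	"fmt"
	"path"
	"strings"
)

// sanitizeRelPath normalizes a substituted file path and guarantees that the
// result is relative and free of ".." segments. ok is false when nothing
// usable remains.
func sanitizeRelPath(p string) (rel string, ok bool) {
	// Treat Windows separators like slashes so the check works cross-platform.
	p = strings.ReplaceAll(p, "\\", "/")
	// Cleaning a rooted path collapses any ".." that would climb above the root.
	cleaned := path.Clean("/" + p)
	rel = strings.TrimPrefix(cleaned, "/")
	if rel == "" {
		return "", false
	}
	return rel, true
}

func main() {
	for _, p := range []string{
		"logs/my-repo/app.log",
		"logs\\my-repo\\app.log",
		"../../etc/passwd",
		"..",
	} {
		rel, ok := sanitizeRelPath(p)
		fmt.Printf("%-24q -> %q ok=%v\n", p, rel, ok)
	}
}
```

The result is always safe to join onto the repository directory, because it can no longer start with a separator or contain a parent-directory segment.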

I had to fix the existing repo template integration test and extend it

to add a check for variable substitutions.

Example:

Fix #21072

Username Attribute is not a required item when creating an

authentication source. If Username Attribute is empty, the username

value of LDAP user cannot be read, so all users from LDAP will be marked

as inactive by mistake when synchronizing external users.

This PR improves the sync logic, if username is empty, the email address

will be used to find user.

1. The "web" package shouldn't depend on the "modules/context" package; instead, each "web context" should register itself with the "web" package.
2. The old Init/Free doesn't make sense, so simplify it
    * The ctx in "Init(ctx)" is never used, and shouldn't be used that way
    * The "Free" is never called and shouldn't be called because the SSPI instance is shared

---------

Co-authored-by: Giteabot <teabot@gitea.io>

Clarify the "link-action" behavior:

> // A "link-action" can post AJAX request to its "data-url"
> // Then the browser is redirect to: the "redirect" in response, or "data-redirect" attribute, or current URL by reloading.

And enhance the "link-action" to support showing a modal dialog for confirmation. A similar general approach could also help PRs like https://github.com/go-gitea/gitea/pull/22344#discussion_r1062883436

> // If the "link-action" has "data-modal-confirm(-html)" attribute, a confirm modal dialog will be shown before taking action.

A lot of duplicate code can be removed now. A good framework design helps to avoid copying and pasting code.

---------

Co-authored-by: silverwind <me@silverwind.io>

## Changes

- Adds the following high level access scopes, each with `read` and `write` levels:
    - `activitypub`
    - `admin` (hidden if user is not a site admin)
    - `misc`
    - `notification`
    - `organization`
    - `package`
    - `issue`
    - `repository`
    - `user`
- Adds new middleware function `tokenRequiresScopes()` in addition to `reqToken()`
    - `tokenRequiresScopes()` is used for each high-level api section
        - _if_ a scoped token is present, checks that the required scope is included based on the section and HTTP method
    - `reqToken()` is used for individual routes
        - checks that required authentication is present (but does not check scope levels as this will already have been handled by `tokenRequiresScopes()`)
- Adds migration to convert old scoped access tokens to the new set of scopes
- Updates the user interface for scope selection

### User interface example

<img width="903" alt="Screen Shot 2023-05-31 at 1 56 55 PM"

src="https://github.com/go-gitea/gitea/assets/23248839/654766ec-2143-4f59-9037-3b51600e32f3">

<img width="917" alt="Screen Shot 2023-05-31 at 1 56 43 PM"

src="https://github.com/go-gitea/gitea/assets/23248839/1ad64081-012c-4a73-b393-66b30352654c">

## tokenRequiresScopes Design Decision

- `tokenRequiresScopes()` was added to more reliably cover api routes.

For an incoming request, this function uses the given scope category

(say `AccessTokenScopeCategoryOrganization`) and the HTTP method (say

`DELETE`) and verifies that any scoped tokens in use include

`delete:organization`.

- `reqToken()` is used to enforce auth for individual routes that

require it. If a scoped token is not present for a request,

`tokenRequiresScopes()` will not return an error
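The check described above can be sketched roughly as follows. This is only an illustration, not the middleware from this PR: `requiredLevel` and `checkScopes` are hypothetical names, the `level:category` string format is borrowed from the example above, and the method-to-level mapping assumes only the `read` and `write` levels named in the Changes list.

```go
package main

import (
	"fmt"
	"net/http"
)

// requiredLevel maps an HTTP method to an access level.
// Assumption: safe methods need "read", everything else needs "write".
func requiredLevel(method string) string {
	switch method {
	case http.MethodGet, http.MethodHead, http.MethodOptions:
		return "read"
	default:
		return "write"
	}
}

// checkScopes is a hypothetical stand-in for the scope check.
// tokenScopes is nil when the request does not use a scoped token;
// in that case nothing is enforced and no error is returned.
func checkScopes(tokenScopes []string, method string, categories ...string) error {
	if tokenScopes == nil {
		return nil
	}
	level := requiredLevel(method)
	granted := make(map[string]bool, len(tokenScopes))
	for _, s := range tokenScopes {
		granted[s] = true
	}
	for _, c := range categories {
		// Assumption: a "write" scope also satisfies a "read" requirement.
		if granted["write:"+c] || (level == "read" && granted["read:"+c]) {
			continue
		}
		return fmt.Errorf("token lacks scope %s:%s", level, c)
	}
	return nil
}

func main() {
	scopes := []string{"read:user", "write:organization"}
	fmt.Println(checkScopes(scopes, http.MethodGet, "user", "organization"))
	fmt.Println(checkScopes(scopes, http.MethodDelete, "user", "organization"))
	fmt.Println(checkScopes(nil, http.MethodDelete, "organization"))
}
```

Whether authentication is present at all is deliberately not checked here; in the design above that remains the job of `reqToken()`.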

## TODO

- [x] Alphabetize scope categories

- [x] Change 'public repos only' to a radio button (private vs public).

Also expand this to organizations

- [X] Disable token creation if no scopes selected. Alternatively, show

warning

- [x] `reqToken()` is missing from many `POST/DELETE` routes in the api.

`tokenRequiresScopes()` only checks that a given token has the correct

scope, `reqToken()` must be used to check that a token (or some other

auth) is present.

- _This should be addressed in this PR_

- [x] The migration should be reviewed very carefully in order to

minimize access changes to existing user tokens.

- _This should be addressed in this PR_

- [x] Link the API to the swagger documentation, and clarify what the read/write/delete levels correspond to

- [x] Review cases where more than one scope is needed as this directly

deviates from the api definition.

- _This should be addressed in this PR_

- For example:

```go
m.Group("/users/{username}/orgs", func() {
	m.Get("", reqToken(), org.ListUserOrgs)
	m.Get("/{org}/permissions", reqToken(), org.GetUserOrgsPermissions)
}, tokenRequiresScopes(auth_model.AccessTokenScopeCategoryUser,
	auth_model.AccessTokenScopeCategoryOrganization),
	context_service.UserAssignmentAPI())
```

## Future improvements

- [ ] Add required scopes to swagger documentation

- [ ] Redesign `reqToken()` to be opt-out rather than opt-in

- [ ] Subdivide scopes like `repository`

- [ ] Once a token is created, if it has no scopes, we should display

text instead of an empty bullet point

- [ ] If the 'public repos only' option is selected, should read

categories be selected by default

Closes #24501

Closes #24799

Co-authored-by: Jonathan Tran <jon@allspice.io>

Co-authored-by: Kyle D <kdumontnu@gmail.com>

Co-authored-by: silverwind <me@silverwind.io>

The PKCE flow according to [RFC

7636](https://datatracker.ietf.org/doc/html/rfc7636) allows for secure

authorization without the requirement to provide a client secret for the

OAuth app.

It has been implemented in Gitea since #5378 (v1.8.0), but without the ability to omit the client secret.

Since #21316 Gitea supports setting client type at OAuth app

registration.

As public clients are already forced to use PKCE since #21316, in this

PR the client secret check is being skipped if a public client is

detected. As Gitea seems to implement PKCE authorization correctly

according to the spec, this would allow for PKCE flow without providing

a client secret.
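For readers unfamiliar with PKCE, the client-side derivation of the S256 code challenge from RFC 7636 can be sketched as below. This is not Gitea code; `codeChallengeS256` is a hypothetical helper name, and the verifier is the example value from Appendix B of the RFC.

```go
package main

import (
	"crypto/sha256"
	"encoding/base64"
	"fmt"
)

// codeChallengeS256 derives the S256 code challenge from a code verifier:
// BASE64URL(SHA256(verifier)) without padding, as defined in RFC 7636.
func codeChallengeS256(verifier string) string {
	sum := sha256.Sum256([]byte(verifier))
	return base64.RawURLEncoding.EncodeToString(sum[:])
}

func main() {
	// Verifier taken from the example in RFC 7636, Appendix B.
	verifier := "dBjftJeZ4CVP-mB92K27uhbUJU1p1r_wW1gFWFOEjXk"
	fmt.Println(codeChallengeS256(verifier))
}
```

A public client sends the challenge with the authorization request and the verifier with the token request, which is what stands in for the client secret in this flow.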

Also add some docs for it, please check language as I'm not a native

English speaker.

Closes #17107

Closes #25047

The admin config page has been broken many times; even a small refactoring could make this page panic.

So, add a test for it, and add another test to cover the 500 error page.

Co-authored-by: Giteabot <teabot@gitea.io>

This PR creates an API endpoint for creating/updating/deleting multiple

files in one API call similar to the solution provided by

[GitLab](https://docs.gitlab.com/ee/api/commits.html#create-a-commit-with-multiple-files-and-actions).

To achieve this, the CreateOrUpdateRepoFile and DeleteRepoFile functions in the files service are unified into one function supporting multiple files and actions.

Resolves #14619

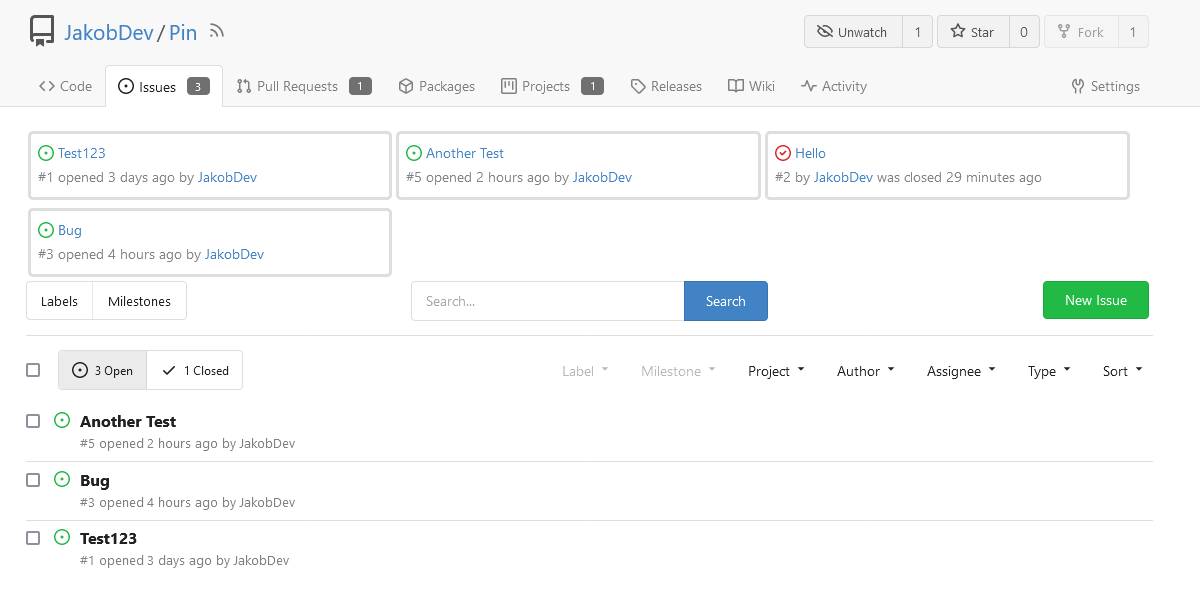

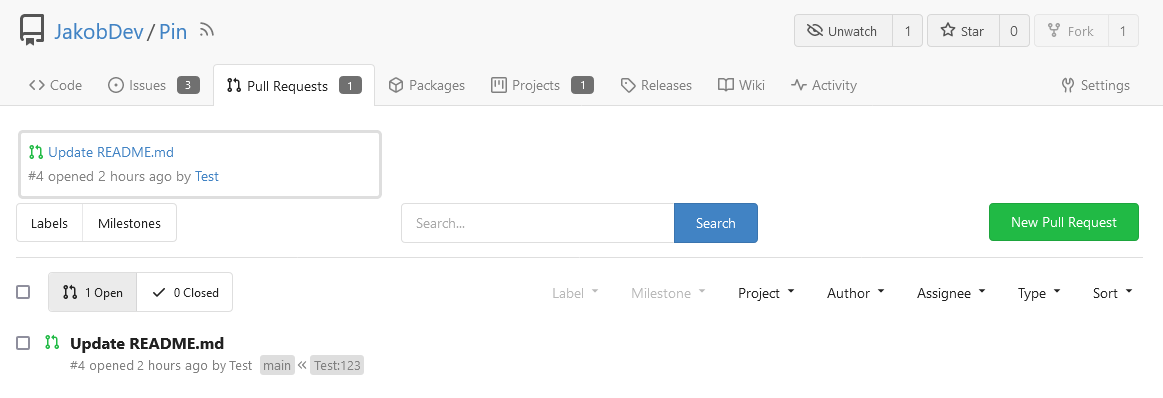

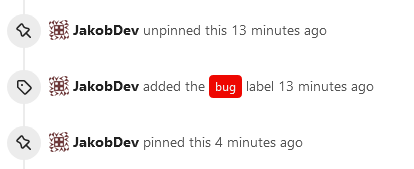

This adds the ability to pin important Issues and Pull Requests. You can

also move pinned Issues around to change their Position. Resolves #2175.

## Screenshots

The Design was mostly copied from the Projects Board.

## Implementation

This uses a new `pin_order` Column in the `issue` table. If the value is

set to 0, the Issue is not pinned. If it's set to a bigger value, the

value is the Position. 1 means it's the first pinned Issue, 2 means it's

the second one etc. This is divided into Issues and Pull requests for each

Repo.
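The `pin_order` semantics described above can be illustrated with a small in-memory sketch. The `Issue` struct and the `pin`/`unpin` helpers are hypothetical and only model the numbering rule; they are not the database code from this PR.

```go
package main

import "fmt"

// Issue is a reduced, hypothetical model; only PinOrder matters here.
type Issue struct {
	ID       int
	PinOrder int // 0 = not pinned, 1 = first pinned issue, 2 = second, ...
}

// pin appends an unpinned issue to the end of the pinned list.
func pin(issues []*Issue, id int) {
	last := 0
	for _, is := range issues {
		if is.PinOrder > last {
			last = is.PinOrder
		}
	}
	for _, is := range issues {
		if is.ID == id && is.PinOrder == 0 {
			is.PinOrder = last + 1
		}
	}
}

// unpin clears the position and closes the gap it leaves behind.
func unpin(issues []*Issue, id int) {
	old := 0
	for _, is := range issues {
		if is.ID == id {
			old = is.PinOrder
			is.PinOrder = 0
		}
	}
	if old == 0 {
		return // the issue was not pinned
	}
	for _, is := range issues {
		if is.PinOrder > old {
			is.PinOrder--
		}
	}
}

func main() {
	issues := []*Issue{{ID: 1}, {ID: 2}, {ID: 3}}
	pin(issues, 1)
	pin(issues, 2)
	pin(issues, 3)
	unpin(issues, 2)
	for _, is := range issues {
		fmt.Printf("issue %d: pin_order=%d\n", is.ID, is.PinOrder)
	}
}
```

In this sketch the slice stands for the Issues (or the Pull Requests) of a single Repo, since the order is kept separately for each.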

## TODO

- [x] You can currently pin as many Issues as you want. Maybe we should

add a Limit, which is configurable. GitHub uses 3, but I prefer 6, as

this is better for bigger Projects, but I'm open for suggestions.

- [x] Pin and Unpin events need to be added to the Issue history.

- [x] Tests

- [x] Migration

**The feature itself is currently fully working, so testers who may find weird edge cases are very welcome!**

---------

Co-authored-by: silverwind <me@silverwind.io>

Co-authored-by: Giteabot <teabot@gitea.io>

## ⚠️ Breaking

The `log.<mode>.<logger>` style config has been dropped. If you used it,

please check the new config manual & app.example.ini to make your

instance output logs as expected.

Although many legacy options still work, it's encouraged to upgrade to

the new options.

The SMTP logger is deleted because SMTP is not suitable to collect logs.

If you have manually configured Gitea log options, please confirm the

logger system works as expected after upgrading.

## Description

Close #12082 and maybe more log-related issues, resolve some related

FIXMEs in old code (which seems unfixable before)

Just like rewriting queue #24505 : make code maintainable, clear legacy

bugs, and add the ability to support more writers (eg: JSON, structured

log)

There is a new document (with examples): `logging-config.en-us.md`

This PR is safer than the queue rewriting, because it's just for

logging, it won't break other logic.

## The old problems

The logging system is quite old and difficult to maintain:

* Unclear concepts: Logger, NamedLogger, MultiChannelledLogger,

SubLogger, EventLogger, WriterLogger etc

* For some code it is difficult to know whether it is right:

`log.DelNamedLogger("console")` vs `log.DelNamedLogger(log.DEFAULT)` vs

`log.DelLogger("console")`

* The old system heavily depends on ini config system, it's difficult to

create new logger for different purpose, and it's very fragile.

* The "color" trick is difficult to use and read, many colors are

unnecessary, and in the future structured log could help

* It's difficult to add other log formats, eg: JSON format

* The log outputer doesn't have full control of its goroutine, it's

difficult to make outputer have advanced behaviors

* The logs could be lost in some cases: eg: no Fatal error when using

CLI.

* Config options are passed by JSON, which is quite fragile.

* INI package makes the KEY in `[log]` section visible in `[log.sub1]`

and `[log.sub1.subA]`, this behavior is quite fragile and would cause

more unclear problems, and there is no strong requirement to support

`log.<mode>.<logger>` syntax.

## The new design

See `logger.go` for documents.


## TODO

* [x] add some new tests

* [x] fix some tests

* [x] test some sub-commands (manually ....)

---------

Co-authored-by: Jason Song <i@wolfogre.com>

Co-authored-by: delvh <dev.lh@web.de>

Co-authored-by: Giteabot <teabot@gitea.io>

This PR started as a refactor and now does 4 things.

- [x] Move the organization and user renaming logic into the services layer and merge it into one function

- [x] Support renaming a user when only the capitalization changes. For example, rename the user from `Lunny` to `lunny`. Only the `user` table needs to change; the others should not be touched.

- [x] Before this PR, some renames were missed, like `agit`

- [x] Fix a bug by changing the status returned by the API from `http.StatusNoContent` to `http.StatusOK`

fix #12192 Support SSH for go get

---------

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Co-authored-by: Giteabot <teabot@gitea.io>

Co-authored-by: mfk <mfk@hengwei.com.cn>

Co-authored-by: silverwind <me@silverwind.io>

Fixes #24145

To solve the bug, I added a "computed" `TargetBehind` field to the

`Release` model, which indicates the target branch of a release.

This is particularly useful if the target branch was deleted in the

meantime (or is empty).

I also did a micro-optimization in `calReleaseNumCommitsBehind`. Instead

of checking that a branch exists and then calling `GetBranchCommit`, I

immediately call `GetBranchCommit` and handle the `git.ErrNotExist`

error.

This optimization is covered by the added unit test.
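The "call first, handle the not-exist error" pattern mentioned above looks roughly like this. It is a self-contained sketch: `errNotExist` and `getBranchCommit` are simplified stand-ins for `git.ErrNotExist` and `GetBranchCommit`, and `targetCommit` is a hypothetical caller.

```go
package main

import (
	"errors"
	"fmt"
)

// errNotExist is a stand-in for git.ErrNotExist in this sketch.
var errNotExist = errors.New("branch does not exist")

// getBranchCommit is a simplified stand-in that looks up a commit ID
// in an in-memory map instead of a repository.
func getBranchCommit(branches map[string]string, name string) (string, error) {
	id, ok := branches[name]
	if !ok {
		return "", fmt.Errorf("branch %q: %w", name, errNotExist)
	}
	return id, nil
}

// targetCommit calls getBranchCommit directly and treats "not exist"
// as a normal outcome rather than checking for the branch beforehand.
func targetCommit(branches map[string]string, target string) (commit string, exists bool, err error) {
	commit, err = getBranchCommit(branches, target)
	if errors.Is(err, errNotExist) {
		return "", false, nil
	}
	if err != nil {
		return "", false, err
	}
	return commit, true, nil
}

func main() {
	branches := map[string]string{"main": "abc123"}
	fmt.Println(targetCommit(branches, "main"))
	fmt.Println(targetCommit(branches, "deleted-branch"))
}
```

This saves one lookup per release and also avoids the window in which a branch could be deleted between the existence check and the call.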

The `GetAllCommits` endpoint can be pretty slow, especially in repos

with a lot of commits. The issue is that it spends a lot of time

calculating information that may not be useful/needed by the user.

The `stat` param was previously added in #21337 to address this, by

allowing the user to disable the calculation of stats for each commit. But

this has two issues:

1. The name `stat` is rather misleading, because disabling `stat`

disables the Stat **and** Files. This should be separated out into two

different params, because getting a list of affected files is much less

expensive than calculating the stats

2. There's still other costly information provided that the user may not

need, such as `Verification`

This PR adds two parameters to the endpoint, `files` and `verification`, to allow the user to explicitly disable this information when listing commits. Both default to true.

This fixes up https://github.com/go-gitea/gitea/pull/10446; in that, the

*expected* timezone was changed to the local timezone, but the computed

timezone was left in UTC.

The result was this failure, when run on a non-UTC system:

```
Diff:
--- Expected
+++ Actual
@@ -5,3 +5,3 @@
  commitMsg: (string) (len=12) "init project",
- commitTime: (string) (len=29) "Wed, 14 Jun 2017 09:54:21 EDT"
+ commitTime: (string) (len=29) "Wed, 14 Jun 2017 13:54:21 UTC"
 },
@@ -11,3 +11,3 @@
  commitMsg: (string) (len=12) "init project",
- commitTime: (string) (len=29) "Wed, 14 Jun 2017 09:54:21 EDT"
+ commitTime: (string) (len=29) "Wed, 14 Jun 2017 13:54:21 UTC"
 }
Test: TestViewRepo2
```

I assume this was probably missed since the CI servers all run in UTC?

The Format() string "Mon, 02 Jan 2006 15:04:05 UTC" was incorrect: 'UTC'

isn't recognized as a variable placeholder, but was just being copied

verbatim. It should use 'MST' in order to command Format() to output the

attached timezone, which is what `time.RFC1123` has.

# ⚠️ Breaking

Many deprecated queue config options are removed (actually, they should

have been removed in 1.18/1.19).

If you see the fatal message when starting Gitea: "Please update your

app.ini to remove deprecated config options", please follow the error

messages to remove these options from your app.ini.

Example:

```

2023/05/06 19:39:22 [E] Removed queue option: `[indexer].ISSUE_INDEXER_QUEUE_TYPE`. Use new options in `[queue.issue_indexer]`

2023/05/06 19:39:22 [E] Removed queue option: `[indexer].UPDATE_BUFFER_LEN`. Use new options in `[queue.issue_indexer]`

2023/05/06 19:39:22 [F] Please update your app.ini to remove deprecated config options

```

Many options in `[queue]` are dropped, including `WRAP_IF_NECESSARY`, `MAX_ATTEMPTS`, `TIMEOUT`, `WORKERS`, `BLOCK_TIMEOUT`, `BOOST_TIMEOUT` and `BOOST_WORKERS`. They can be removed from app.ini.

# The problem

The old queue package has some legacy problems:

* complexity: I doubt many people could tell how it works.

* maintainability: Too many channels and mutex/cond are mixed together, and too many different structs/interfaces depend on each other.

* stability: due to the complexity & maintainability problems, there are sometimes strange bugs that are difficult to debug, and some code doesn't have tests (indeed, some code is difficult to test because a lot of things are mixed together).

* general applicability: although it is called "queue", its behavior is not that of a well-known queue.

* scalability: it doesn't seem easy to make it work with a cluster

without breaking its behaviors.

It came from some very old code to "avoid breaking", however, its

technical debt is too heavy now. It's a good time to introduce a better

"queue" package.

# The new queue package

It keeps using the old config and concepts as much as possible.

* It only contains two major kinds of concepts:
    * The "base queue": channel, levelqueue, redis
        * They have the same abstraction, the same interface, and they are tested by the same testing code.
    * The "WorkerPoolQueue": it uses the "base queue" to provide the "worker pool" function and calls the "handler" to process the data in the base queue.
* The new code doesn't do "PushBack"
    * Think about a queue with many workers: "PushBack" can't guarantee the order for re-queued unhandled items, so the new code just does a "normal push"
* The new code doesn't do "pause/resume"
    * "pause/resume" was designed to handle some handler's failure, eg: the document indexer (elasticsearch) is down
    * If a queue is paused for a long time, either the producers block or the new items are dropped.
    * The new code doesn't do such a "pause/resume" trick; it's not a common queue behavior and it doesn't help much.
    * If there are unhandled items, the "push" function just blocks for a few seconds and then re-queues them and retries.
* The new code doesn't do "worker booster"
    * Gitea's queue handlers are light functions, the cost is only the goroutine, so it doesn't make sense to "boost" them.
    * The new code only uses the "max worker number" to limit the concurrent workers.
* The new "Push" never blocks forever
    * Instead of creating more and more blocking goroutines, returning an error is more friendly to the server and to the end user.

There are more details in code comments: eg: the "Flush" problem, the

strange "code.index" hanging problem, the "immediate" queue problem.

Almost ready for review.

TODO:

* [x] add some necessary comments during review

* [x] add some more tests if necessary

* [x] update documents and config options

* [x] test max worker / active worker

* [x] re-run the CI tasks to see whether any test is flaky

* [x] improve the `handleOldLengthConfiguration` to provide more

friendly messages

* [x] fine tune default config values (eg: length?)

## Code coverage:

Due to #24409, we can now specify `--not` when getting all commits from a repo to exclude commits from a different branch.

When I wrote that PR, I forgot to also update the code that counts the number of commits in the repo. So now, if the `--not` option is used, the count may be too high, which can indicate that another page of data is available when it is not.

This PR passes `--not` to the commands that count the number of commits in a repo.

This gives more "freshness" to the explore page. So it's not just the

same X users on the explore page by default, now it matches the same

sort as the repos on the explore page.

---------

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>